Artificial Intelligence Bedtime stories from a sweaty Prince Andrew.

I had a molar tooth extracted yesterday evening. I have spent the better part of 24 hours lying in agony and cursing dentists everywhere. Fortunately, I could distract myself with a machine learning program called Midjourney. It describes itself as “An independent research lab. Exploring new mediums of thought. Expanding the imaginative powers of the human species.” Alongside DALL-E, it is one of a few new computer vision programs which allow you to generate images from detailed written descriptions.

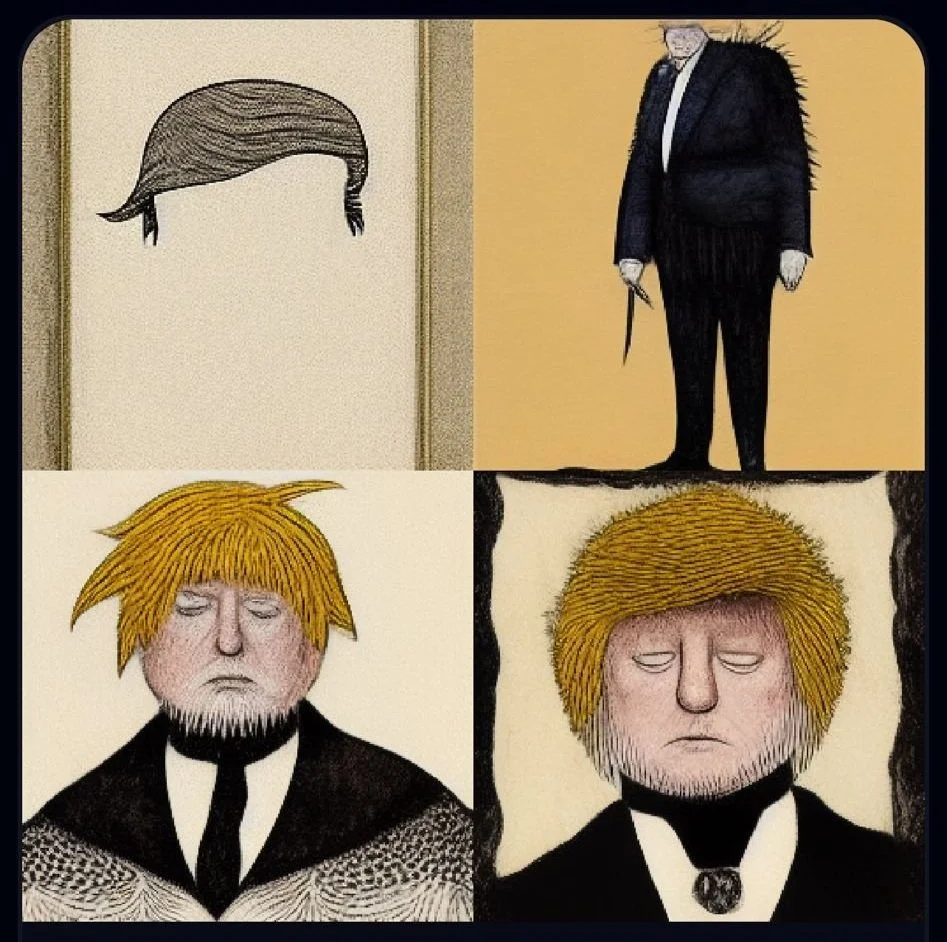

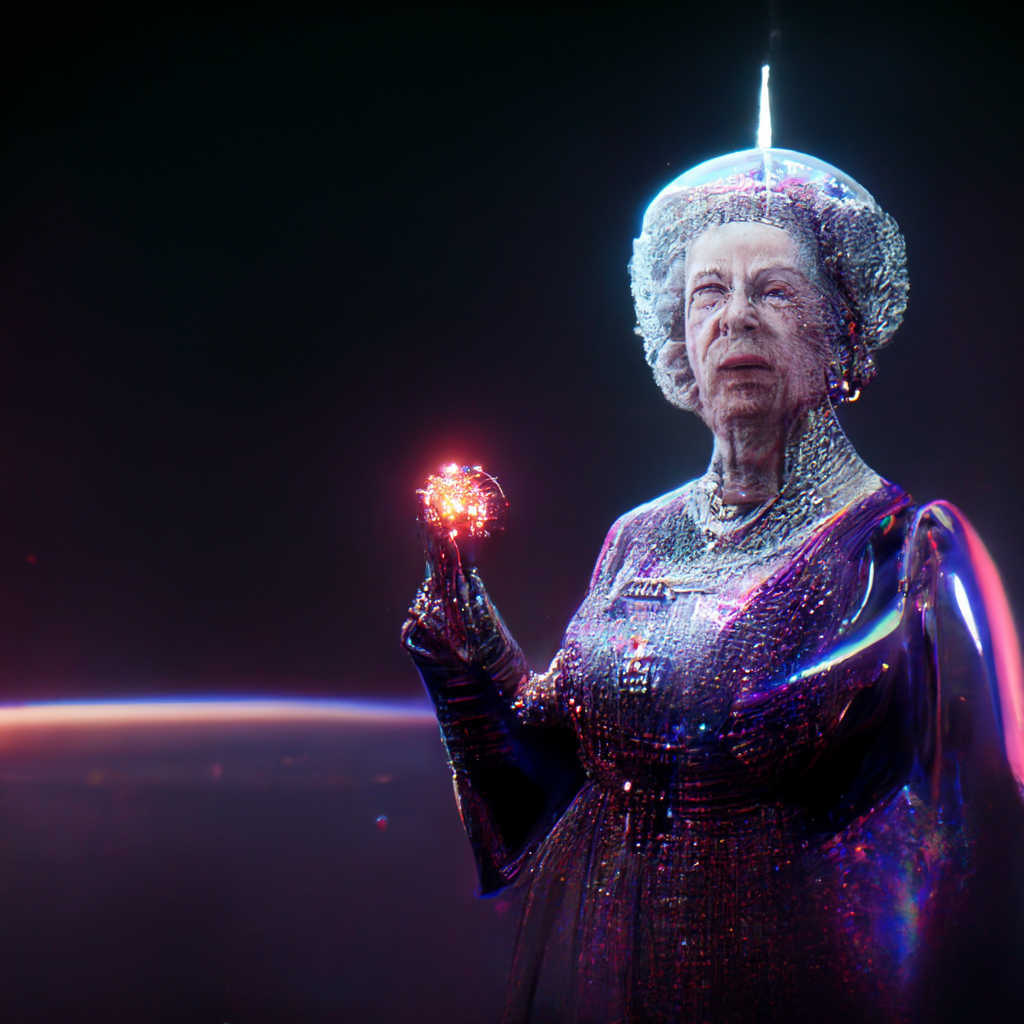

My friend sent me a link where you can sign up to the Midjourney Discord server and get a few free tries. He also sent me a generated image of Donald Trump in the illustration style of Edward Gorey, twenty-six images of Prince Andrew sweating whilst reading books to children, three images of Queen Elizabeth rendered as a living tumour and several images of Boris Johnson rendered as a beef steak. “Good chance I’ll get banned soon,” he added.

“Gumiho in a Hieronymus Bosch style” - Made with Midjourney

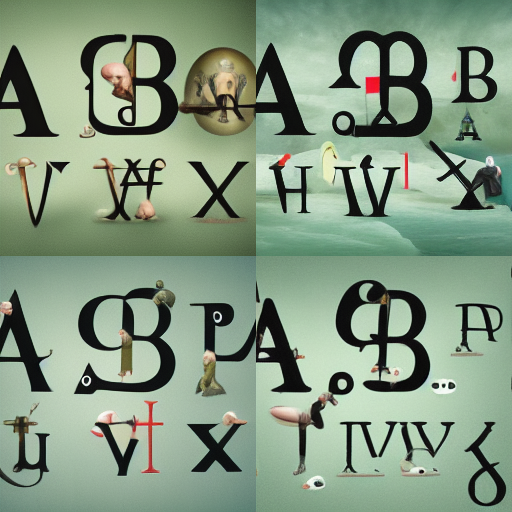

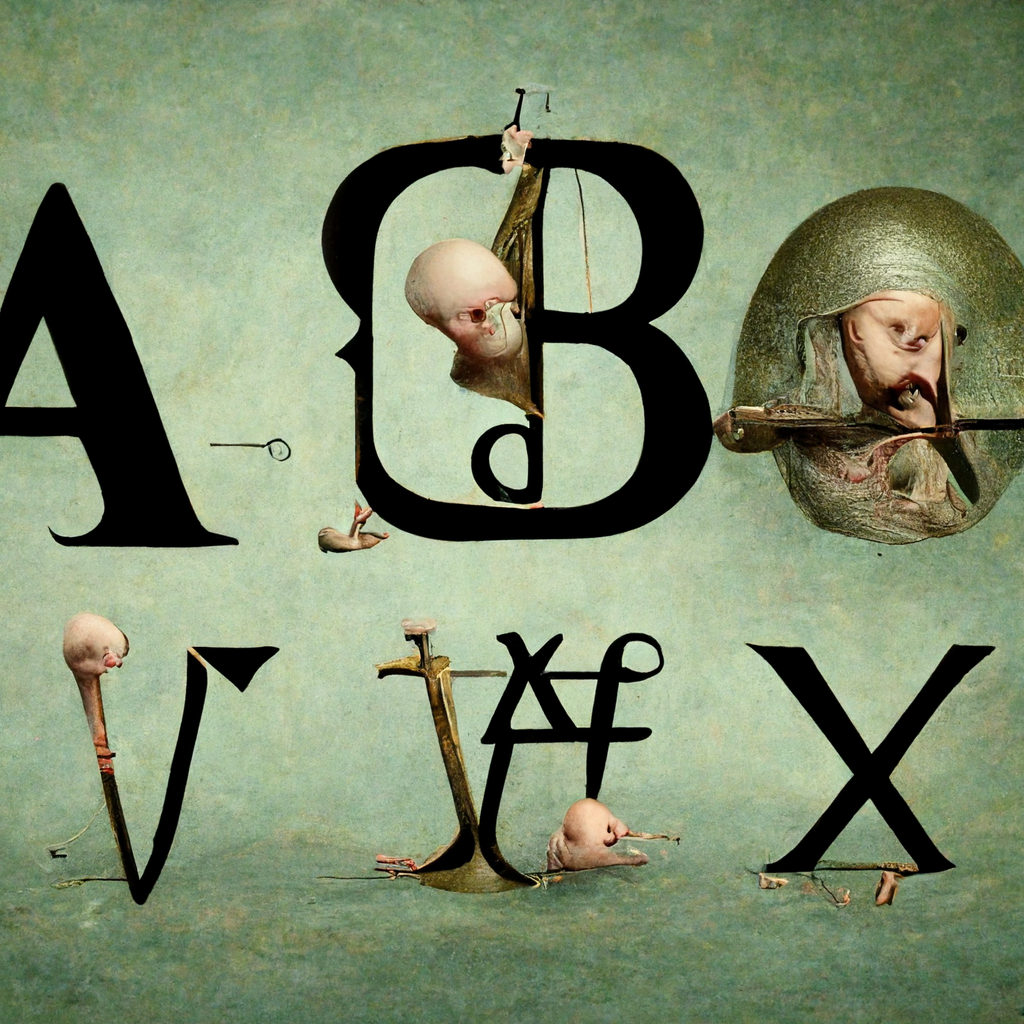

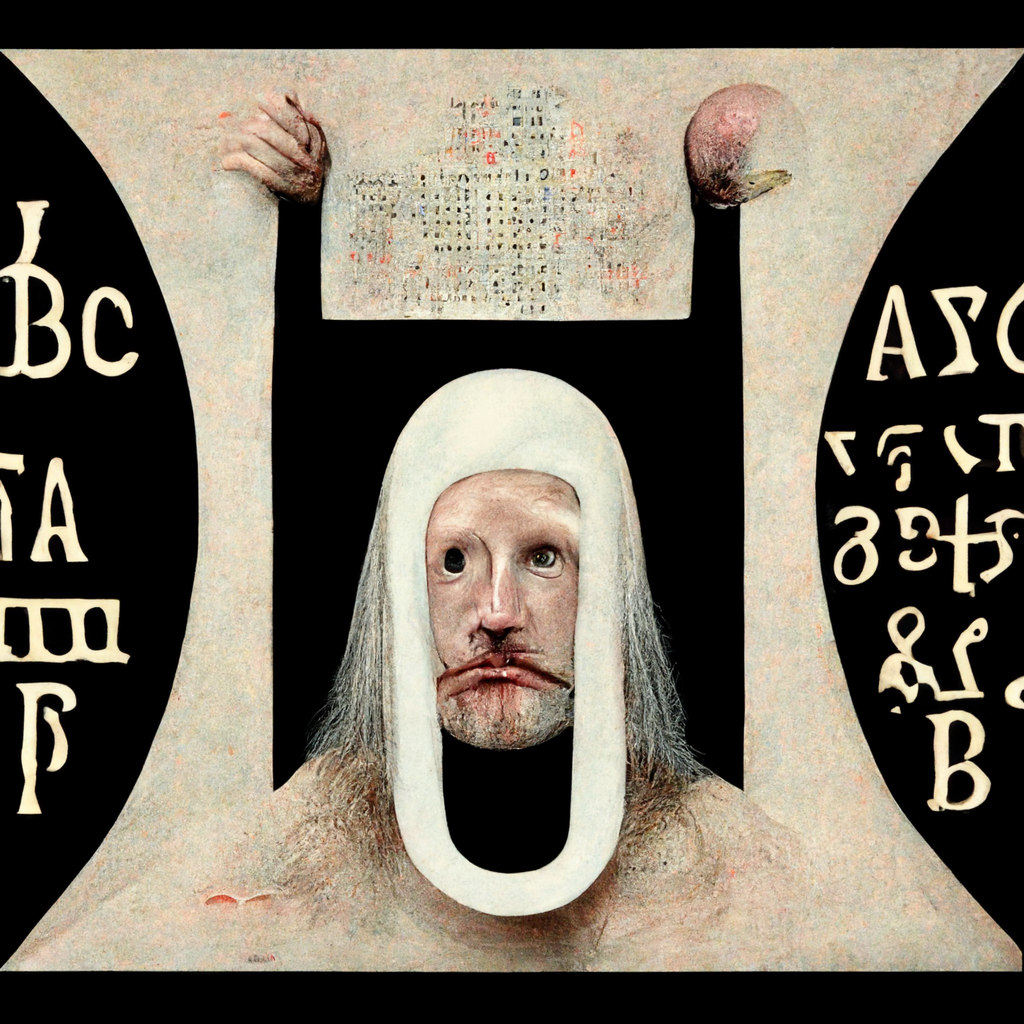

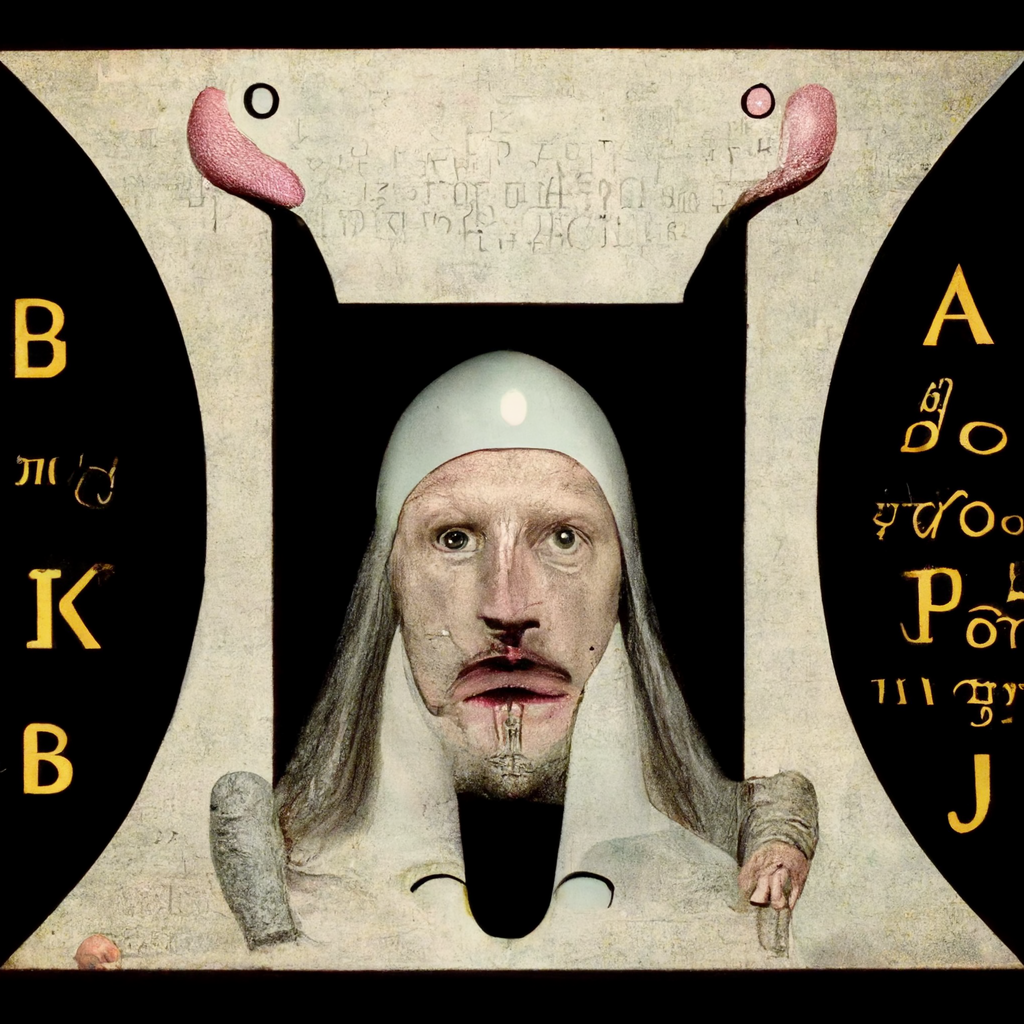

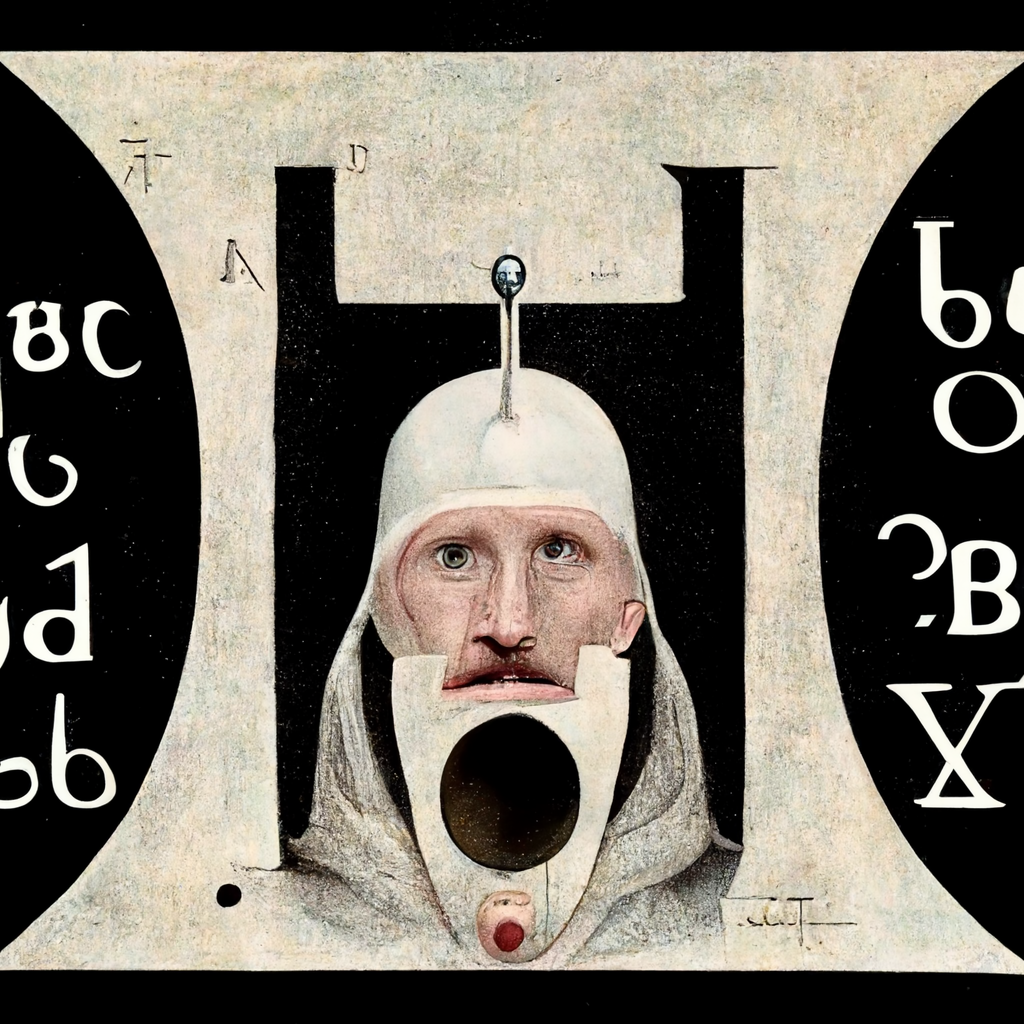

I logged in and started playing. These days I’ve been thinking about creating my own branded typeface, so I let the machines have a stab at it. I threw together a bunch of things I like: “futuristic and mediaeval alphabet in a Hieronymus Bosch and Matthew Barney style.” Midjourney did not disappoint. It generates images in blocks of four (below). The two on the left were my favourites, so I clicked to have more variations of these generated:

“Futuristic and mediaeval alphabet in a Hieronymus Bosch and Matthew Barney style” - Made with Midjourney

The first is more alphabet-based and incorporates wonky mediaeval characters alongside red crosses, eccentric tool-like forms and Dalí-esque surreal blobs of flesh which are draped over the characters and splattered on the floor. The top-left has a beautiful bubble form which reminds me of Bosch, so I rendered that in high quality:

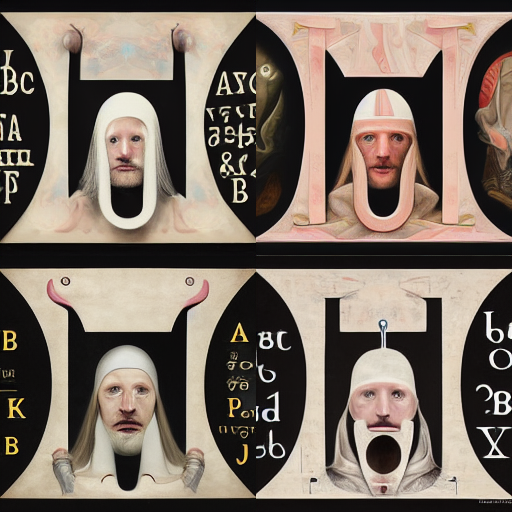

The second veers more towards Matthew Barney’s character-based work, presenting gaunt portraits of characters who could either be battling dragons or blasting off into space. Between the figures are black mirrors, or deep wells, with illuminated letters emerging. It’s a seamless matching of past and future, which reminds me of the TV show “Raised by Wolves”. I rendered all four in high quality:

Next I tried to manifest some Korean folk characters I’ve become interested in - the Gumiho, or the fox with nine tails (left), and the Ureongi Gaksi, or snail bride (right). I stuck with the Hieronymus Bosch style:

I rendered all kinds of variations of the Gumiho because I was captivated by the beauty of the rendering. The story follows a fox who is desperate to transform into a human and is told she must eat the livers of 100 men to succeed. However, having successfully eaten 99 livers, she falls in love with the 100th man she meets and cannot kill him. She is therefore unable to transform into a human and is killed by the villagers. I think these portraits really betray the juxtaposition of brutality and vulnerability which the Gumiho represents:

I also tried to generate a Taiyo Matsumoto style Gumiho, but it didn’t move me so much:

“Gumiho in a Taiyo Matsumoto style” - Made with Midjourney

I tried a couple with Björk; one fighting a snail, another just hanging out:

I also generated “Queen Elizabeth as a futuristic space wizard”:

Then I started to pay more attention to what everyone else was generating. Because the whole thing takes place on a Discord server, you can scroll through the images which other users are creating in real time. If you get a moment to try this out, it’s almost as interesting as watching your own commands render into images. Some users enter detailed and complex commands such as “Red-haired Ophelia trapped in dust clouds, aggressive bears, sadness pouring out of innocent eyes, injured, in a boat, on a lake in the bushes, 3D, epic scene, highly detailed, octane rendering, 8k”, whereas other users type things like “a house made out of spaghetti bolognese”:

The more I watched, the more interested I became in the simple commands, as opposed to the detailed ones - this is because, I reasoned, it gave the artificial intelligence more licence to “imagine”. The more I describe, the less true bias is pulled from the visual data sets. For example, I could generate “American waving flag with trump hat”, and prescribe the scene, or I could just generate “American” and see what the data sets generate.

So to my mind the next logical step was to generate “God” and see how the data sets were biased. As you can see from the four resulting images, they are pretty Abrahamic, with haloed figures appearing to be masculine with their beards and bare chests. The light pours down onto them from above, and we have a sense that they may be quite elderly with their receding hairlines or bald heads, and two with white hair. The bottom two stand cruciform in the classical posture of Jesus showing his stigmata, and the top-right figure dons a crucifix made of light. So what does this say about the Midjourney dataset? Well, in this instance it’s definitely very Christian-oriented - but it’s important to remember that I did write the word “God” in English - I would have had very different results if I had written it in Khmer, Hindi or Arabic.

“God” - Made with Midjourney

Next I played with nationality, generating “English person” (left), “American person” (middle) and “Korean person” (right):

The term “English person” generated figures located somewhere between a 1970s schoolboy and a Victorian middle-aged choir member. All have narrow shoulders, look fairly pale and sickly and either have lots of hair, or no hair at all. Not really sure what to say about the floating-bush headmaster in the top-right. They all look very male to me.

The “American person” is also very masculine-looking in all forms. I love the way that the red, white and blue of the flag are incorporated into each portrait: the glasses, face paint, t-shirt, shirt and scarf. They all look like retired presidents, except for the bottom-right, who looks like a retired rock star. They are certainly of a similar age.

The term “Korean person” generated fairly middle-aged and androgynous characters also. The pastel colours feel a lot more Korean to me, and the clothing style is closer to a traditional hanbok. Interestingly, across all three tests, the bottom-right figures have a similar haircut.

“Person” - Made with Midjourney

Finally I just searched for “person”. As you can see, they are all quite Caucasian, except for the top-right, which appears to be obscured in darkness. This could be because my search term was in English. They are all quite androgynous. I’m most interested in the bottom-left, who appears to have a clump of hair shaved from their head, standing in fog as car headlights approach them. Make of it what you will.

Looking through the images rendered by other users, there are also some mind-numbing things. One user relentlessly generated pictures of existing car models for the better part of five hours, generating hundreds of images which clogged up the feed. Another generated pictures of Star Wars characters inside Star Wars snow globes over, and over, and over. Another generated at least twenty images of Captain Kirk, and then pictures of sexy cats intermittently. Perhaps this insight can remind us that this is just a machine, and cannot differentiate the way that humans can.

So, can robots replace human artists? At first I was really freaked out. I mean, this can certainly wipe out a huge number of “concept image” jobs. But as I scrolled it became apparent that the term “in the style of ___(Picasso / Kahlo / Van Gogh / insert iconic artist here)___” was almost always present. This reminds me that the machines cannot necessarily create new and original styles, but can only mash existing things together. So I guess every artist needs a unique and ground-breaking style to be safe. Easy, lol.

My final image was “Boris looking sad at the job centre.” Bye Boris :)